AI Integration

Practical AI integration — document verification, intelligent search, automated reporting, and AI-augmented workflows using Claude, GPT, and custom models.

AI That Delivers Real Business Value

We integrate AI where it genuinely adds value — not as a buzzword, but as a practical tool that makes your application smarter, faster, or more capable. From cloud API integration to fully self-hosted AI infrastructure trained on your own data.

Our approach is pragmatic: we use the right model for the task, we build robust fallbacks for when AI is uncertain, and we never put AI on a critical path without human oversight where it matters.

Critically, we don't just call cloud APIs. We've built our own self-hosted AI infrastructure — locally run models trained on client-specific data. This means your sensitive information never leaves your environment, and the AI understands your domain because it's been trained on your actual workflows.

Self-Hosted AI Infrastructure

Most agencies offering "AI integration" are wrapping cloud API calls in a nice UI. We go further — locally deployed models running on dedicated hardware, trained and fine-tuned on domain-specific data.

Data Sovereignty

Sensitive data never leaves your infrastructure. No third-party data processing agreements to negotiate. No risk of training data being used to improve someone else's model.

Domain-Specific Training

A model trained on your data knows your domain deeply. General-purpose models know a bit about everything — fine-tuned models know a lot about what matters to you.

Predictable Costs

Cloud API pricing scales with every request. Self-hosted models have a largely fixed infrastructure cost: within the capacity of your hardware, processing 100 or 100,000 requests costs the same.

No External API Dependency

No reliance on external API availability. Your AI continues to function even when cloud providers have outages. Essential for mission-critical systems.

Full Control

You control the model version, fine-tuning data, system prompts, output formatting, and upgrade schedule. No surprise changes, no deprecated endpoints.

Regulatory Compliance

For NHS systems, government platforms, and regulated industries, keeping AI processing on-premises or within controlled environments can be a hard requirement.

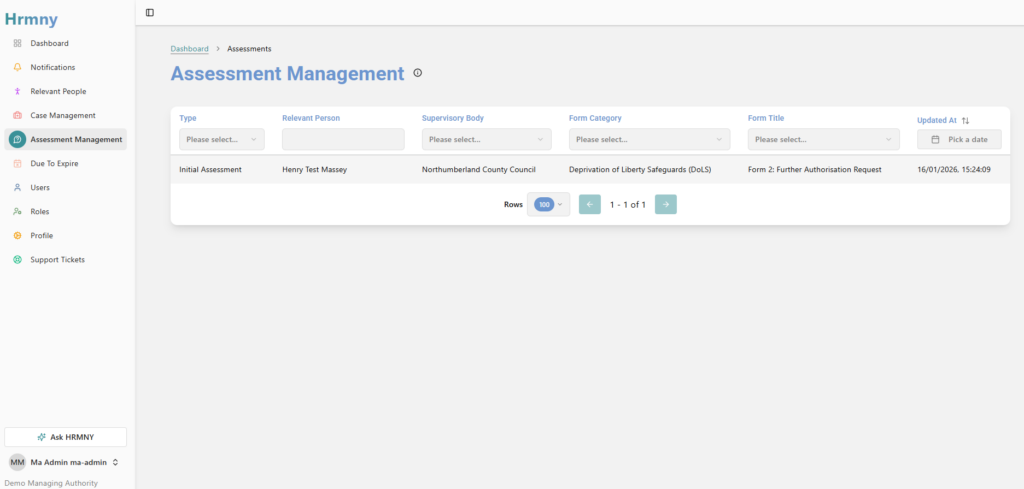

In Production: Ask HRMNY

Our flagship self-hosted AI deployment — a domain-specific assistant built into a statutory health and social care assessment platform.

HRMNY is a multi-role referral and assessment platform we built for managing Deprivation of Liberty Safeguards (DoLS), Care Act assessments, and mental capacity evaluations. It handles some of the most sensitive data imaginable: detailed assessments of vulnerable adults, clinical observations, legal capacity determinations, and safeguarding information.

Sending any of this to a third-party AI provider would be a non-starter — both ethically and legally. So we built "Ask HRMNY", a self-hosted AI assistant trained specifically on adult care legislation, DoLS procedures, Mental Capacity Act frameworks, and assessment completion standards.

The AI provides field-level quality assurance on assessment documentation in real time. When an assessor is completing a DoLS form, Ask HRMNY flags where statutory language is missing, where reasoning doesn't meet the legal threshold, or where additional evidence is needed — all without any patient data ever leaving the infrastructure.

The Honest Trade-Offs

Self-hosted AI is powerful, but it's not free of trade-offs. We believe in giving clients the full picture.

Infrastructure Investment

Self-hosted AI requires GPU hardware, maintenance, and expertise. The upfront cost is higher than a cloud API key, but for high-volume applications the economics can tilt in your favour within months.
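Whether self-hosting pays back for your volumes comes down to a simple break-even calculation. The sketch below is illustrative only: every figure is a placeholder rather than a quote, and it deliberately ignores depreciation, staff time, and price changes.

```python
from typing import Optional

def breakeven_months(hardware_cost: float,
                     monthly_running_cost: float,
                     cloud_cost_per_request: float,
                     requests_per_month: int) -> Optional[float]:
    """Months until self-hosting becomes cheaper than paying per request.

    Self-hosted: one-off hardware_cost plus monthly_running_cost (power, hosting, upkeep).
    Cloud: cloud_cost_per_request * requests_per_month, every month.
    Returns None when self-hosting never pays back at this volume.
    """
    cloud_monthly = cloud_cost_per_request * requests_per_month
    monthly_saving = cloud_monthly - monthly_running_cost
    if monthly_saving <= 0:
        return None  # running costs alone exceed the cloud bill
    return hardware_cost / monthly_saving

# Placeholder figures, not real prices: 12,000 of hardware, 500/month to run,
# 0.01 per cloud request.
print(breakeven_months(12_000, 500, 0.01, 200_000))  # high volume: roughly 8 months
print(breakeven_months(12_000, 500, 0.01, 20_000))   # low volume: None
```

The low-volume case is the other side of the trade-off: below a certain request rate, the fixed running cost never gets paid back.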

Model Capability Ceiling

The largest frontier models require massive compute to run locally. Self-hosted models are typically smaller and more focused — excellent at their trained domain, but less capable at general knowledge tasks.

Ongoing Maintenance

Models need retraining as your data evolves. Infrastructure needs monitoring, scaling, and security patching. This is an ongoing commitment, not a deploy-and-forget situation.

Development Complexity

Building a self-hosted AI pipeline involves model selection, fine-tuning, vector databases, embedding pipelines, and inference optimisation. The engineering investment is higher — but so is the control.

Our Recommendation: Hybrid

We rarely recommend pure self-hosted or pure cloud. The smartest approach is hybrid: self-hosted models for sensitive, domain-specific tasks where data privacy and specialisation matter — cloud APIs for general-purpose tasks where frontier models genuinely outperform. This isn't a compromise. It's using the right tool for each specific job.
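The hybrid policy can be expressed as a small routing rule. This is an illustrative sketch, not a description of any specific deployment: the `Task` flags and `Backend` names are hypothetical, and a real router would also weigh cost, latency, and contractual limits.

```python
from dataclasses import dataclass
from enum import Enum

class Backend(Enum):
    SELF_HOSTED = "self_hosted"
    CLOUD = "cloud"

@dataclass(frozen=True)
class Task:
    name: str
    contains_personal_data: bool  # hypothetical flag set by the calling code
    domain_specific: bool         # hypothetical flag: needs the fine-tuned model

def choose_backend(task: Task) -> Backend:
    # Sensitive data stays in your environment, whatever the task.
    if task.contains_personal_data:
        return Backend.SELF_HOSTED
    # Non-sensitive but specialised work suits the domain-trained local model.
    if task.domain_specific:
        return Backend.SELF_HOSTED
    # General-purpose tasks go to a cloud frontier model.
    return Backend.CLOUD

print(choose_backend(Task("assessment_qa", True, True)))    # Backend.SELF_HOSTED
print(choose_backend(Task("marketing_copy", False, False)))  # Backend.CLOUD
```

Putting the privacy check first means no later rule can accidentally send personal data to a cloud provider.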

What We've Built

Real AI deployments in production systems — not demos or proof-of-concepts.

AI Document Verification

For the Ad-MOTO Portal: a Claude API integration that automatically verifies rider documents. It extracts key fields, cross-references them against profile data, assigns confidence scores, and flags potential fraud. Documents scoring above a confidence threshold are auto-approved; the rest go to a human review queue.
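The auto-approve / human-review split can be sketched as a routing function that runs after extraction. This is not the Ad-MOTO code: the field names, the 0.9 threshold, and the case-insensitive exact match (a simple stand-in for real fuzzy matching) are all assumptions for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class VerificationResult:
    extracted: dict[str, str]  # fields pulled from the document by the model
    confidence: float          # model-reported confidence, 0.0 to 1.0
    fraud_flags: list[str] = field(default_factory=list)

def mismatched_fields(extracted: dict[str, str], profile: dict[str, str]) -> list[str]:
    """Fields present in both document and profile whose values disagree."""
    return sorted(
        key for key in extracted.keys() & profile.keys()
        if extracted[key].strip().lower() != profile[key].strip().lower()
    )

def route(result: VerificationResult, profile: dict[str, str],
          threshold: float = 0.9) -> str:
    # Any fraud signal or profile mismatch goes to a human, regardless of confidence.
    if result.fraud_flags or mismatched_fields(result.extracted, profile):
        return "human_review"
    # Only clean, high-confidence results skip the queue.
    if result.confidence >= threshold:
        return "auto_approve"
    return "human_review"
```

Checking fraud flags and mismatches before the threshold keeps a confident but wrong extraction from being auto-approved.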

Natural Language Reporting

For Ad-MOTO Hub — campaign managers ask questions in plain English and receive generated reports with visualisations. No SQL knowledge required to interrogate complex advertising performance data.
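The source doesn't describe Ad-MOTO Hub's internals, but one common way to let non-SQL users query data safely is to have the model emit a small structured query spec rather than raw SQL, then validate it against an allowlist and compile it into parameterised SQL. The sketch below assumes that approach; the table, column, and metric names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical allowlists: only these identifiers can ever reach the database.
ALLOWED_METRICS = {"impressions", "clicks", "spend"}
ALLOWED_DIMENSIONS = {"campaign_id", "region", "day"}

@dataclass(frozen=True)
class QuerySpec:
    metric: str
    dimension: str
    start_date: str  # ISO date, e.g. "2024-01-01"
    end_date: str

def compile_spec(spec: QuerySpec) -> tuple[str, tuple[str, str]]:
    """Validate a model-produced spec and return (sql, params).

    Identifiers come only from the allowlists; the dates are passed as
    bound parameters, never interpolated into the SQL string.
    """
    if spec.metric not in ALLOWED_METRICS:
        raise ValueError(f"metric not allowed: {spec.metric!r}")
    if spec.dimension not in ALLOWED_DIMENSIONS:
        raise ValueError(f"dimension not allowed: {spec.dimension!r}")
    date.fromisoformat(spec.start_date)  # raises ValueError if malformed
    date.fromisoformat(spec.end_date)
    sql = (
        f"SELECT {spec.dimension}, SUM({spec.metric}) AS total "
        "FROM campaign_stats "
        "WHERE day BETWEEN ? AND ? "
        f"GROUP BY {spec.dimension} ORDER BY total DESC"
    )
    return sql, (spec.start_date, spec.end_date)
```

Running the compiled query on a read-only database connection adds a second layer of protection if a spec ever slips through.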

Intelligent Meeting Notes

In our CRM platform — users paste rough meeting notes and the AI structures them into standardised contact notes, extracts follow-up actions, and suggests record updates. The feature users mention most.
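A typical way to build this kind of feature (assumed here, not taken from the CRM's code) is to ask the model for JSON in a fixed shape and validate it before anything touches the contact record. The field names are hypothetical; suggested updates are kept as proposals for the user to confirm.

```python
import json
from dataclasses import dataclass
from typing import Optional

@dataclass
class ActionItem:
    description: str
    owner: Optional[str] = None
    due: Optional[str] = None  # ISO date, if the model found one

@dataclass
class StructuredNote:
    summary: str
    actions: list[ActionItem]
    suggested_updates: dict[str, str]  # CRM field -> proposed value, shown to the user for approval

def parse_model_output(raw: str) -> StructuredNote:
    """Parse the model's JSON reply; raise ValueError rather than save anything malformed."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    summary = data.get("summary")
    if not isinstance(summary, str) or not summary.strip():
        raise ValueError("missing summary")
    raw_actions = data.get("actions", [])
    if not isinstance(raw_actions, list):
        raise ValueError("actions must be a list")
    actions = []
    for item in raw_actions:
        if not isinstance(item, dict) or not isinstance(item.get("description"), str):
            raise ValueError(f"bad action item: {item!r}")
        actions.append(ActionItem(item["description"], item.get("owner"), item.get("due")))
    updates = data.get("suggested_updates", {})
    if not isinstance(updates, dict) or not all(
        isinstance(k, str) and isinstance(v, str) for k, v in updates.items()
    ):
        raise ValueError("suggested_updates must map strings to strings")
    return StructuredNote(summary.strip(), actions, updates)
```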

AI-Powered Chatbots

Context-aware support chatbots that understand your application's domain and answer questions by querying your actual data — not just regurgitating FAQ content.
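Answering from your actual data usually means retrieval-augmented generation (RAG): embed the question, find the most similar stored chunks, and put them in the prompt. In this minimal sketch, tiny hand-written vectors stand in for a real embedding model and an in-memory list stands in for a vector database.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec: list[float],
          chunks: list[tuple[str, list[float]]],
          k: int = 3) -> list[str]:
    """Return the texts of the k chunks most similar to the query vector."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(question: str, context: list[str]) -> str:
    """Ground the model in retrieved context and tell it not to guess beyond it."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )
```

The "say so" instruction is what keeps the chatbot from falling back on generic answers when your data doesn't cover the question.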

Our AI Stack

- Self-Hosted Models — Locally deployed, fine-tuned models for domain-specific tasks. Running on dedicated GPU infrastructure with full data sovereignty.

- Claude API (Anthropic) — Our primary cloud model for document analysis, content generation, and complex reasoning. Consistently the most reliable for structured data extraction.

- OpenAI GPT — Used where specific capabilities align better, particularly for embedding generation and code-adjacent operations.

- RAG (Retrieval-Augmented Generation) — Vector search pipelines that give AI models access to your data without fine-tuning. Answer questions about specific datasets with precision.

- Laravel Queue Integration — AI operations as background jobs with retry logic, timeout handling, and graceful fallback paths. API failures never block the user.
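The retry-then-fallback behaviour for queued AI jobs can be sketched without any framework. This is not Laravel's queue API (Laravel configures this declaratively on the job class, for example with its `$tries` and `$backoff` properties and a `failed()` method); it is a plain-Python illustration with a hypothetical `call_model` callable and an injectable `sleep` so the delays can be tested.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical: raised by the model client for retryable failures (timeouts, rate limits, 5xx)."""

def run_with_fallback(call_model: Callable[[], T],
                      fallback: Callable[[], T],
                      max_attempts: int = 3,
                      base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Try the model up to max_attempts times with exponential backoff,
    then return the fallback result instead of surfacing an error to the user.
    Non-transient exceptions propagate immediately."""
    for attempt in range(max_attempts):
        try:
            return call_model()
        except TransientError:
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    return fallback()
```

A fallback might return a "pending review" status or a rules-based result, so the user's request completes even when the model doesn't.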

AI-Augmented Development

Beyond integrating AI into client applications, we use AI tools extensively in our own development process. Claude Code and Cursor are core parts of our workflow — accelerating implementation by 3-5x while maintaining quality through rigorous human review of every AI-generated output.

This isn't vibe coding. We call it vibe engineering: experienced developers direct AI with architectural discipline. The AI writes the implementation. The human ensures it's correct, secure, and maintainable.

When AI Is (and Isn't) the Answer

We'll tell you honestly if AI is the right solution. Sometimes a well-designed database query, a set of business rules, or a simple integration does the job better, cheaper, and more reliably. We recommend AI when it provides capabilities traditional approaches genuinely can't match — and when it is the answer, we'll tell you whether you need cloud, self-hosted, or both.

Exploring AI for your project?

Whether you need a cloud API integration, a self-hosted AI system for sensitive data, or just want an honest conversation about what AI can and can't do for your business — we're happy to talk.

Book a Consultation